Chapter 4 is entitled “Second-Order Equations.”

Section 4.1 is entitled “Definitions and Examples.” (With a section title like that, you know this summary is going to be filled with fun and happy times.)

This section is filled with lots of propositions and theorems and physics, so it won’t be so bad. Consider this the exposition to the next five sections you will see on this blog. Hooray! However, the thing about theorems is that each one should come with a proof, which means I have to write those up. Hooray.

A second-order differential equation is similar to a first-order differential equation, with an independent variable, a dependent variable, and a first derivative, except (surprise, surprise) a second-order equation has a second derivative as well. Assuming we can solve for this second derivative, our equation has the form

$$y'' = f(t, y, y').$$

A solution to this differential equation is a twice continuously differentiable function y(t), defined on some interval, that satisfies

$$y''(t) = f\big(t, y(t), y'(t)\big)$$

for every t in that interval.

An example of a second-order equation is Newton’s second law (F = ma), since acceleration is the second derivative of position. The force is usually a function of time, position, and velocity, so the differential equation has the form

$$m x'' = F(t, x, x').$$

A special type of second-order differential equation is the linear equation, which has the form

$$y'' + p(t)y' + q(t)y = g(t).$$

As with first-order linear equations, p, q, and g are called coefficients. And, just like last time, y’’, y’, and y must appear to first order. The right-hand side of the equation (i.e., g(t)) is called the forcing term because it usually arises from an external force. If the forcing term is equal to zero, then the equation is called homogeneous. This means the homogeneous equation associated with our linear equation has the form

$$y'' + p(t)y' + q(t)y = 0.$$

Another example of a second-order differential equation is the motion of a vibrating spring.

I drew a picture similar to the one in the book. The first position of the spring (marked with the (1) thingy on the right) is called spring equilibrium. This is where the spring rests without any mass attached. The (2) position is called spring-mass equilibrium. This is the position x₀ where the spring is again in equilibrium, now with a mass attached. There are two forces acting on the weight: gravity and what is called the restoring force of the spring. We will denote the restoring force by R(x), since it depends on how far the spring has been stretched. (Here x measures displacement downward from spring equilibrium, so gravity contributes +mg.) Equilibrium means the total force on the weight is zero, so the force equation for this system is

$$mg + R(x_0) = 0.$$

In the (3) position, the spring is stretched even further and is no longer in equilibrium, which means the weight is probably moving. Allow me to remind you that velocity is the first derivative of position. (This will come in handy later.)

Now, for (2) we wrote a force equation for the system. Let’s do the same for (3). We still have gravity and the restoring force acting on the weight, but now we also have what is called a damping force, which we will denote by D. The book defines this force as “the resistance to the motion of the weight due to the medium (air?) through which the weight is moving and perhaps to something internal in the spring” (138). The damping force depends mainly on velocity, so we can write it as D(v). Finally, we’ll add a function F(t) for any external forces acting on the system.

If we write acceleration as x’’ (the second derivative of position), then we can write the total force on the weight, ma, as mx’’. Since v = x’, our second-order differential equation for the forces acting on this system is

$$m x'' = mg + R(x) + D(x') + F(t).$$

Now, you might be asking, “Well, how do we find the restoring force?”

To which I say, “Physics!”

Hooke’s law says that the restoring force is proportional to the displacement. This means the restoring force is

$$R(x) = -kx.$$

The minus sign indicates that the force acts to decrease the displacement, which is why k, known as the spring constant, is positive. Something to note about Hooke’s law is that it is only valid for small displacements. So if we assume our restoring force follows Hooke’s law, our equation becomes

$$m x'' = mg - kx + D(x') + F(t).$$

Now assume that there are no external forces acting on the system and that the weight is at spring-mass equilibrium (position (2)). This means x = x₀ is constant, so its first and second derivatives are zero. With zero velocity there is no damping either (D(0) = 0), so our equation becomes

$$0 = mg - kx_0, \qquad\text{so}\qquad k = \frac{mg}{x_0}.$$
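As a quick numerical sketch of the equilibrium relation 0 = mg - kx₀ (so k = mg/x₀), here is how you might get k from a measured equilibrium stretch. The function name `spring_constant`, the value g = 9.8 m/s², and the 2 kg / 0.1 m sample numbers are my own assumptions for illustration, not from the book.

```python
# Sketch: spring constant from spring-mass equilibrium, k = m*g / x0 (SI units).
# The value of g and the sample numbers are illustrative assumptions.

G = 9.8  # gravitational acceleration in m/s^2 (assumed value)


def spring_constant(mass_kg: float, stretch_m: float, g: float = G) -> float:
    """Return k in N/m, given the mass and the equilibrium stretch x0 (> 0)."""
    if stretch_m <= 0:
        raise ValueError("equilibrium stretch x0 must be positive")
    return mass_kg * g / stretch_m


# Hypothetical example: a 2 kg mass stretches the spring 0.1 m at equilibrium.
k = spring_constant(2.0, 0.1)
print(f"k = {k:.1f} N/m")  # k = 196.0 N/m
```

Notice that the units work out: kg · (m/s²) / m = N/m.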

The book discusses units very quickly, so I will too. The book uses the International System (as it should), where the unit of length is the meter (m), the unit of time is the second (s), and the unit of mass is the kilogram (kg). Every other unit is derived from these fundamental units. For example, the unit of force is kg·m/s², which is known as the newton (N).

Now you might be asking, “How do we find the damping force?”

The damping force always acts against the velocity. This means the force takes the form

$$D(v) = -\mu(v)\,v.$$

Here μ is a nonnegative function of velocity. For some objects and for small velocities, the damping force is proportional to the velocity. In that case μ is a nonnegative constant, called the damping constant, and D(v) = -μv.
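Putting the pieces together (assuming both Hooke’s law and linear damping hold, i.e. small displacements and small velocities), we can substitute R(x) = -kx and D(x’) = -μx’ into mx’’ = mg + R(x) + D(x’) + F(t):

$$m x'' = mg - kx - \mu x' + F(t).$$

If we measure from spring-mass equilibrium instead, with y = x - x₀ (so y’ = x’ and y’’ = x’’), and use mg = kx₀, the constant gravity term cancels:

$$m y'' + \mu y' + k y = F(t).$$

The shift to y is just a convenient change of variable. This is a linear equation with constant coefficients p = μ/m, q = k/m, and forcing term g = F/m.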

Some other examples for you (taken out of the context of the book’s examples, but useful in many respects):

Let’s now talk about the existence and uniqueness of solutions to second-order linear differential equations. The results are very similar to the first-order case. Also, just for your information, all of the theorems and propositions will be direct quotes from the book, since the book explains them best, and I’m going to use the theorem and proposition numbers the book uses.

Theorem 1.17: “Suppose the functions p(t), q(t), and g(t) are continuous on the interval (α, β). Let t₀ be any point in (α, β). Then for any real numbers y₀ and y₁ there is one and only one function y(t) defined on (α, β), which is a solution to

$$y'' + p(t)y' + q(t)y = g(t)$$

and satisfies the initial conditions y(t₀) = y₀ and y’(t₀) = y₁” (140).

The major difference between this theorem for second-order differential equations and the related theorem for first-order differential equations back in Section 2.7 is that an initial condition is needed for both y and y’. Also note that this theorem guarantees that a solution exists, and that it exists over the whole interval where the coefficients are defined and continuous.
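To see why both initial conditions matter, here is a minimal numerical sketch for y’’ + p(t)y’ + q(t)y = g(t) with y(t₀) = y₀ and y’(t₀) = y₁. It rewrites the equation as a first-order system in (y, v) with v = y’ and steps it with the classical fourth-order Runge-Kutta method. The function name `solve_linear_ivp`, the step count, and the choice of numerical method are my own, not anything from the book.

```python
import math
from typing import Callable, Tuple

Func = Callable[[float], float]


def solve_linear_ivp(p: Func, q: Func, g: Func, t0: float, y0: float, y1: float,
                     t_end: float, steps: int = 1000) -> Tuple[float, float]:
    """Approximate (y(t_end), y'(t_end)) for y'' + p(t) y' + q(t) y = g(t)
    with y(t0) = y0 and y'(t0) = y1, using classical 4th-order Runge-Kutta."""
    h = (t_end - t0) / steps

    def rhs(t: float, y: float, v: float) -> Tuple[float, float]:
        # First-order system with v = y':  y' = v,  v' = g(t) - p(t) v - q(t) y
        return v, g(t) - p(t) * v - q(t) * y

    t, y, v = t0, y0, y1
    for _ in range(steps):
        k1y, k1v = rhs(t, y, v)
        k2y, k2v = rhs(t + h / 2, y + h / 2 * k1y, v + h / 2 * k1v)
        k3y, k3v = rhs(t + h / 2, y + h / 2 * k2y, v + h / 2 * k2v)
        k4y, k4v = rhs(t + h, y + h * k3y, v + h * k3v)
        y += h / 6 * (k1y + 2 * k2y + 2 * k3y + k4y)
        v += h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
        t += h
    return y, v


# y'' + y = 0 (p = 0, q = 1, g = 0): same y(0) = 1, two different choices of y'(0).
zero = lambda t: 0.0
one = lambda t: 1.0
print(solve_linear_ivp(zero, one, zero, 0.0, 1.0, 0.0, math.pi / 2))  # near (0, -1): y = cos t
print(solve_linear_ivp(zero, one, zero, 0.0, 1.0, 1.0, math.pi / 2))  # near (1, -1): y = cos t + sin t
```

Both runs start from the same y(0) = 1 but end up in different places, so y(t₀) by itself does not pin down the solution. You also need y’(t₀).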

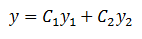

Proposition 1.18: “Suppose that y₁ and y₂ are both solutions to the homogeneous, linear equation

$$y'' + p(t)y' + q(t)y = 0.$$

Then the function

$$y(t) = C_1 y_1(t) + C_2 y_2(t)$$

is also a solution to [this equation] for any constants C₁ and C₂” (141).

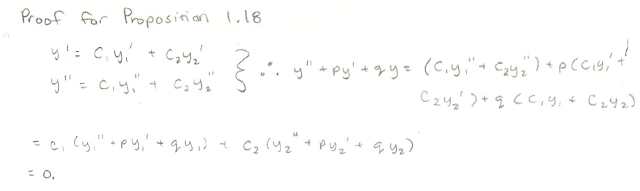

Here’s the proof for that:
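The standard argument is a direct substitution. Write y = C₁y₁ + C₂y₂ and use the fact that the derivative of a linear combination is the same linear combination of the derivatives:

$$\begin{aligned}
y'' + p y' + q y &= (C_1 y_1 + C_2 y_2)'' + p\,(C_1 y_1 + C_2 y_2)' + q\,(C_1 y_1 + C_2 y_2) \\
&= C_1\big(y_1'' + p y_1' + q y_1\big) + C_2\big(y_2'' + p y_2' + q y_2\big) \\
&= C_1 \cdot 0 + C_2 \cdot 0 = 0.
\end{aligned}$$

The last step uses the assumption that y₁ and y₂ are solutions. This only works because the equation is linear and homogeneous. With a nonzero forcing term g, the right-hand side would come out as (C₁ + C₂)g instead of g.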

Another definition for you: a linear combination of two functions u and v is a function of the form w = Au + Bv, where A and B are constants.

With this definition, our proposition says that a linear combination of two solutions to a linear, homogeneous differential equation is also a solution to that equation.
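Here is a rough numerical sanity check of that statement, as a sketch. It estimates y’’ + p(t)y’ + q(t)y with central finite differences, so a true solution gives a residual that is only approximately zero. The helper name `residual` and the example equation y’’ - 3y’ + 2y = 0 (whose solutions include eᵗ and e²ᵗ) are my own illustrative choices.

```python
import math
from typing import Callable

Func = Callable[[float], float]


def residual(y: Func, p: Func, q: Func, t: float, h: float = 1e-4) -> float:
    """Estimate y''(t) + p(t) y'(t) + q(t) y(t) using central differences."""
    d1 = (y(t + h) - y(t - h)) / (2 * h)             # approximates y'(t)
    d2 = (y(t + h) - 2 * y(t) + y(t - h)) / (h * h)  # approximates y''(t)
    return d2 + p(t) * d1 + q(t) * y(t)


# Illustrative equation: y'' - 3y' + 2y = 0, so p(t) = -3 and q(t) = 2.
p = lambda t: -3.0
q = lambda t: 2.0
y1 = lambda t: math.exp(t)
y2 = lambda t: math.exp(2 * t)
combo = lambda t: 3 * y1(t) - 5 * y2(t)  # linear combination with C1 = 3, C2 = -5
not_a_solution = lambda t: t * t         # t^2 gives 2 - 6t + 2t^2, not 0

for t in (0.0, 0.5, 1.0):
    print(t, residual(combo, p, q, t), residual(not_a_solution, p, q, t))
```

The combination’s residual stays at round-off level for every t, while t² clearly fails.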

Two functions u and v are linearly independent on an interval (α, β) if neither of them is a constant multiple of the other on that interval. If one is a constant multiple of the other on that interval, then they are said to be linearly dependent. For example, u(t) = t and v(t) = t² are linearly independent on the entire real line (negative infinity to positive infinity). An example of linearly dependent functions is u(t) = t and v(t) = 17t.

Theorem 1.23: “Suppose that y₁ and y₂ are linearly independent solutions to the equation

$$y'' + p(t)y' + q(t)y = 0.$$

Then the general solution to [this equation] is

$$y(t) = C_1 y_1(t) + C_2 y_2(t),$$

where C₁ and C₂ are arbitrary constants” (142).

Two linearly independent solutions, like the solutions in Theorem 1.23, form what is called a fundamental set of solutions.

So if we want to prove this theorem, we need to think about linear independence. Usually we can tell by simple observation whether or not two functions are linearly independent. Sometimes that isn’t the case, though, so we use something called the Wronskian. The Wronskian of two functions (let’s call them u and v) is

$$W(t) = \det\begin{pmatrix} u(t) & v(t) \\ u'(t) & v'(t) \end{pmatrix} = u(t)v'(t) - v(t)u'(t).$$
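For a concrete feel, here is a tiny sketch that evaluates W(t) = u(t)v’(t) - v(t)u’(t) for the two examples from the linear independence paragraph. The derivatives are supplied by hand, and the function name `wronskian` is my own choice.

```python
from typing import Callable

Func = Callable[[float], float]


def wronskian(u: Func, du: Func, v: Func, dv: Func, t: float) -> float:
    """W(t) = u(t) v'(t) - v(t) u'(t), with the derivatives supplied by hand."""
    return u(t) * dv(t) - v(t) * du(t)


# u(t) = t, v(t) = t^2 (independent): W(t) = t * 2t - t^2 * 1 = t^2
print(wronskian(lambda t: t, lambda t: 1.0, lambda t: t * t, lambda t: 2 * t, 3.0))  # 9.0
# u(t) = t, v(t) = 17t (dependent): W(t) = t * 17 - 17t * 1 = 0 for every t
print(wronskian(lambda t: t, lambda t: 1.0, lambda t: 17 * t, lambda t: 17.0, 3.0))  # 0.0
```

One caveat: for t and t², W(0) = 0 even though the functions are independent. That doesn’t contradict anything, because the “never vanishes” part of the proposition below requires u and v to be solutions of a linear, homogeneous equation with continuous coefficients.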

You might be asking, “What does this tell us about anything?”

Here’s a proposition to answer this question for you.

Propositions 1.26 and 1.27 are about the results of the Wronskian and what they mean for the homogeneous differential equation. They are summed up in Proposition 1.29, which I will quote for you now:

Proposition 1.29: “Suppose the functions u and v are solutions to the linear, homogeneous equation

$$y'' + p(t)y' + q(t)y = 0$$

in the interval (α, β). If W(t₀) ≠ 0 for some t₀ in the interval (α, β), then u and v are linearly independent in (α, β). On the other hand, if u and v are linearly independent in (α, β), then W(t) never vanishes in (α, β)” (144).
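A practical consequence is that, for two solutions, checking the Wronskian at a single point t₀ is enough. If W(t₀) ≠ 0 they are independent, and if W(t₀) = 0 they cannot be independent, so they are dependent. Here is a sketch of that check. The names `wronskian` and `independent_solutions`, the tolerance used to absorb round-off, and the example equation y’’ - 3y’ + 2y = 0 are my own assumptions.

```python
import math
from typing import Callable

Func = Callable[[float], float]


def wronskian(u: Func, du: Func, v: Func, dv: Func, t: float) -> float:
    """W(t) = u(t) v'(t) - v(t) u'(t), with the derivatives supplied by hand."""
    return u(t) * dv(t) - v(t) * du(t)


def independent_solutions(u: Func, du: Func, v: Func, dv: Func,
                          t0: float, tol: float = 1e-12) -> bool:
    """Only valid when u and v are SOLUTIONS of y'' + p(t)y' + q(t)y = 0:
    then W(t0) != 0 means independent and W(t0) == 0 means dependent.
    `tol` is an assumed cutoff for floating-point round-off."""
    return abs(wronskian(u, du, v, dv, t0)) > tol


exp = math.exp
# e^t and e^{2t} both solve y'' - 3y' + 2y = 0, and W(t) = e^{3t} is never zero.
print(independent_solutions(exp, exp, lambda t: exp(2 * t), lambda t: 2 * exp(2 * t), 0.0))  # True
# e^t and 4e^t also both solve it, but one is a multiple of the other, so W(t) = 0.
print(independent_solutions(exp, exp, lambda t: 4 * exp(t), lambda t: 4 * exp(t), 0.0))  # False
```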

The proof of this proposition consists of the other two propositions, which work like exposition for this major one.

Tying everything back together, here’s the proof for Theorem 1.23:
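Here is a sketch of the standard argument in my own notation. Let y be any solution on (α, β) and pick a point t₀ in (α, β). We want constants C₁ and C₂ with

$$\begin{aligned}
C_1 y_1(t_0) + C_2 y_2(t_0) &= y(t_0), \\
C_1 y_1'(t_0) + C_2 y_2'(t_0) &= y'(t_0).
\end{aligned}$$

The determinant of this linear system is exactly W(t₀), which is nonzero by Proposition 1.29 because y₁ and y₂ are linearly independent solutions. So the system has exactly one solution (C₁, C₂). By Proposition 1.18, C₁y₁ + C₂y₂ is a solution, and it has the same value and derivative at t₀ as y. By the uniqueness part of Theorem 1.17, it must equal y on all of (α, β). Conversely, every such linear combination is a solution by Proposition 1.18. Therefore C₁y₁ + C₂y₂ is the general solution.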

One last thing to leave you with: when formulating initial value problems for second-order differential equations, we need to specify both y(t₀) and y’(t₀). We had a theorem saying this for linear equations, but the requirement applies to all second-order differential equations.
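Those two initial values are exactly what determine C₁ and C₂ in the general solution. As a sketch, you can solve the resulting 2×2 system with Cramer’s rule. Its determinant is the Wronskian W(t₀), which is why we need y₁ and y₂ to be independent. The helper name `fit_constants` and the example problem y’’ - 3y’ + 2y = 0, y(0) = 1, y’(0) = 0 are my own illustrative choices.

```python
import math
from typing import Callable, Tuple

Func = Callable[[float], float]


def fit_constants(y1: Func, dy1: Func, y2: Func, dy2: Func,
                  t0: float, a: float, b: float) -> Tuple[float, float]:
    """Find C1, C2 so that y = C1*y1 + C2*y2 has y(t0) = a and y'(t0) = b,
    solving the 2x2 linear system with Cramer's rule."""
    w = y1(t0) * dy2(t0) - y2(t0) * dy1(t0)  # determinant of the system = W(t0)
    if w == 0:
        raise ValueError("W(t0) = 0, so the constants are not uniquely determined")
    c1 = (a * dy2(t0) - y2(t0) * b) / w
    c2 = (y1(t0) * b - a * dy1(t0)) / w
    return c1, c2


# Illustrative IVP: y'' - 3y' + 2y = 0, y(0) = 1, y'(0) = 0, with y1 = e^t, y2 = e^{2t}.
exp = math.exp
c1, c2 = fit_constants(exp, exp, lambda t: exp(2 * t), lambda t: 2 * exp(2 * t), 0.0, 1.0, 0.0)
print(c1, c2)  # 2.0 -1.0, i.e. y = 2e^t - e^{2t}
```

You can check the result by hand: y(0) = 2 - 1 = 1 and y’(0) = 2 - 2 = 0.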

That’s all section 4.1 has to throw at you. I'll see you when I see you.
