Section 9.2 is entitled “Planar Systems.”

I’ve been on a week-long hiatus and I decided that waiting around wasn’t going to fix the fact that this section has pages and pages and pages of notes. Now I realize that these pages are mostly examples. But, for the most part, I’m going to be throwing a lot of quotes around concerning theorems and propositions. Unfortunately, it’s one of those sections.

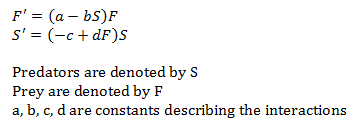

Planar systems are just linear systems in two dimensions.

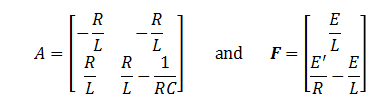

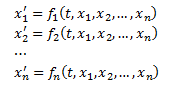

We would like to know how to solve the following systems:

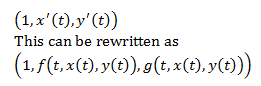

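So recall the last section, where we discussed eigenvalues λ and eigenvectors v with the solution $y(t) = e^{\lambda t}v$. We know that eigenvalues are solutions of det(A – λI) = 0. Let’s expand that out, writing the entries of A as a₁₁, a₁₂, a₂₁, and a₂₂:

$$\det(A - \lambda I) = (a_{11} - \lambda)(a_{22} - \lambda) - a_{12}a_{21} = \lambda^2 - (a_{11} + a_{22})\lambda + (a_{11}a_{22} - a_{12}a_{21}) = 0$$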

This is a quadratic equation, and its left-hand side is called the characteristic polynomial. Something to note is that the constant term is the determinant of A (without the eigenvalue). For sake of space, we’ll denote this as D (it probably stands for determinant). Then there’s the coefficient of λ, which is –T, where T is the sum of the diagonal entries of A. If you’re in linear algebra, you’ll notice this is the trace of A, which can also be denoted as tr(A). This means we can rewrite the characteristic equation of this planar system as

$$\lambda^2 - T\lambda + D = 0$$
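To make T and D concrete, here is a minimal Python sketch that computes them for a 2 × 2 matrix and then solves λ² – Tλ + D = 0 with the quadratic formula. The matrix `A` and the helper names `trace_det` and `eigenvalues` are my own choices for illustration, not anything from the book.

```python
import cmath

def trace_det(A):
    """Return (T, D) for a 2x2 matrix A given as nested lists."""
    (a11, a12), (a21, a22) = A
    T = a11 + a22              # trace: sum of the diagonal entries
    D = a11 * a22 - a12 * a21  # determinant
    return T, D

def eigenvalues(A):
    """Solve lambda^2 - T*lambda + D = 0 with the quadratic formula."""
    T, D = trace_det(A)
    root = cmath.sqrt(T * T - 4 * D)  # complex sqrt, so a negative discriminant is fine
    return (T + root) / 2, (T - root) / 2

# Hypothetical example matrix, not taken from the book.
A = [[-4, 6], [-3, 5]]
T, D = trace_det(A)          # T = 1, D = -2
lam1, lam2 = eigenvalues(A)  # roots of lambda^2 - lambda - 2

for lam in (lam1, lam2):
    # Each eigenvalue should make the characteristic polynomial vanish.
    print(lam, abs(lam * lam - T * lam + D))
```

Using `cmath.sqrt` instead of `math.sqrt` means the same function keeps working when the roots turn out to be complex.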

There are three cases we must consider for this characteristic polynomial:

1. Two distinct real roots (when T² – 4D > 0)
2. Complex conjugate roots (when T² – 4D < 0)
3. A real root of “multiplicity 2” (when T² – 4D = 0)
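The three cases only depend on the sign of T² – 4D, so they can be sketched as a tiny Python function. The name `classify` and the sample values of T and D are assumptions made up for this illustration. With floating-point inputs, the exact `== 0` case would realistically need a tolerance.

```python
def classify(T, D):
    """Name the eigenvalue case for a 2x2 matrix with trace T and determinant D."""
    disc = T * T - 4 * D  # discriminant of lambda^2 - T*lambda + D
    if disc > 0:
        return "two distinct real roots"
    if disc < 0:
        return "complex conjugate roots"
    return "one real root of multiplicity 2"

print(classify(1, -2))  # disc = 9
print(classify(0, 1))   # disc = -4
print(classify(2, 1))   # disc = 0
```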

Here’s a proposition for you:

“Suppose λ₁ and λ₂ are eigenvalues of an n × n matrix A. Suppose v₁ ≠ 0 is an eigenvector for λ₁ and v₂ ≠ 0 is an eigenvector for λ₂. If λ₁ ≠ λ₂, then v₁ and v₂ are linearly independent” (379).

Something really nice to note from this is that if v₁ and v₂ are linearly independent, then so are the solutions

$$y_1(t) = e^{\lambda_1 t}v_1 \quad\text{and}\quad y_2(t) = e^{\lambda_2 t}v_2,$$

since at t = 0 they are exactly v₁ and v₂.

Something nice to note about both of these linear independence conclusions is that the eigenvalues and eigenvectors don’t have to be real for these propositions to be true. If one or more is complex, then the results are still true. Hooray!

Here’s a theorem for you:

“Suppose that A is a 2 × 2 matrix with real eigenvalues λ₁ ≠ λ₂. Suppose that v₁ and v₂ are eigenvectors associated with the eigenvalues. Then the general solution to the system y′ = Ay is

$$y(t) = C_1 e^{\lambda_1 t}v_1 + C_2 e^{\lambda_2 t}v_2,$$

where C₁ and C₂ are arbitrary constants” (380).
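As a sanity check on this theorem, here is a small Python sketch for one matrix with two distinct real eigenvalues. The matrix `A`, its eigenpairs, and the helper names are assumptions I picked for illustration (the eigenpairs were worked out by hand). The sketch builds the combination C₁e^(λ₁t)v₁ + C₂e^(λ₂t)v₂ and measures how far y′ is from Ay.

```python
import math

# Hypothetical example: A has eigenvalues 2 and -1.
A = [[-4.0, 6.0], [-3.0, 5.0]]
lam1, v1 = 2.0, (1.0, 1.0)    # A v1 = 2 v1
lam2, v2 = -1.0, (2.0, 1.0)   # A v2 = -v2

def matvec(M, x):
    """Multiply a 2x2 matrix by a 2-vector."""
    return (M[0][0] * x[0] + M[0][1] * x[1],
            M[1][0] * x[0] + M[1][1] * x[1])

def y(t, C1, C2):
    """General solution C1 e^(lam1 t) v1 + C2 e^(lam2 t) v2."""
    e1, e2 = math.exp(lam1 * t), math.exp(lam2 * t)
    return tuple(C1 * e1 * a + C2 * e2 * b for a, b in zip(v1, v2))

def y_prime(t, C1, C2):
    """Exact derivative of y: each term picks up its own eigenvalue."""
    e1, e2 = math.exp(lam1 * t), math.exp(lam2 * t)
    return tuple(C1 * lam1 * e1 * a + C2 * lam2 * e2 * b for a, b in zip(v1, v2))

def residual(t, C1, C2):
    """Largest entry of |y'(t) - A y(t)|; should be at rounding-error level."""
    lhs = y_prime(t, C1, C2)
    rhs = matvec(A, y(t, C1, C2))
    return max(abs(p - q) for p, q in zip(lhs, rhs))

print(residual(0.7, 3.0, -2.0))
```

The constants 3.0 and -2.0 are arbitrary; any other pair should give a residual that is just as small.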

In the case of complex eigenvalues, T² – 4D < 0, which would make the square root full of negative-ness, which was a big no-no taught to us on the first day of square roots. However, we can package that negative-ness with the lovely letter i, so our complex roots can look like

$$\lambda = \frac{T + i\sqrt{4D - T^2}}{2} \quad\text{and}\quad \overline{\lambda} = \frac{T - i\sqrt{4D - T^2}}{2}$$

This means we’ll have a complex matrix (A – λI now has complex entries), so I’ll just leave some websites here if you need a refresher on the subject:

Here’s a theorem followed by a proposition for you regarding the topic of complex conjugate eigenvalues:

NOTE: You may or may not wonder why I changed one of the eigenvalues from what the book had originally. This is because the book used an overlined λ (also said as “λ bar”) and this will not show up in the text on the website. So in the text I’ve marked it as [λ] instead. It means the same thing as the overlined version, namely the complex conjugate of λ.

“Suppose that A is a 2 × 2 matrix with complex conjugate eigenvalues λ and [λ]. Suppose that w is an eigenvector associated with λ. Then the general solution to the system y′ = Ay is

$$y(t) = C_1 e^{\lambda t}w + C_2 e^{\overline{\lambda} t}\,\overline{w},$$

where C₁ and C₂ are arbitrary constants” (383).

And the proposition:

“Suppose A is an n × n matrix with real coefficients, and suppose that z(t) = x(t) + iy(t) is a solution to the system z′ = Az.

(a) The complex conjugate [z] = x – iy is also a solution to [this system].

(b) The real and imaginary parts x and y are also solutions to [this system]. Furthermore, if z and [z] are linearly independent, so are x and y” (383).

So when we have a complex eigenvalue, it will be of the form λ = α + iβ, and its associated eigenvector will be of the form w = v₁ + iv₂. When we have a 2 × 2 matrix A, the general solution of the system y′ = Ay will be of the form

$$y(t) = C_1 e^{\alpha t}(v_1\cos\beta t - v_2\sin\beta t) + C_2 e^{\alpha t}(v_1\sin\beta t + v_2\cos\beta t),$$

where C₁ and C₂ are arbitrary constants (384).
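Here is a rough Python sketch of the complex case, assuming one particular matrix with eigenvalue λ = –1 + 2i and eigenvector w = (1, i), both worked out by hand for this illustration. It compares the real and imaginary parts of z(t) = e^(λt)w against the sine and cosine formulas, and checks that the real part really solves the system.

```python
import cmath
import math

# Hypothetical example: T = -2 and D = 5, so T^2 - 4D = -16 < 0.
A = [[-1.0, 2.0], [-2.0, -1.0]]
lam = complex(-1.0, 2.0)             # lambda = alpha + i*beta
w = (complex(1, 0), complex(0, 1))   # w = v1 + i*v2 with v1 = (1, 0), v2 = (0, 1)

def matvec(M, x):
    """Multiply a 2x2 matrix by a 2-vector."""
    return (M[0][0] * x[0] + M[0][1] * x[1],
            M[1][0] * x[0] + M[1][1] * x[1])

def z(t):
    """Complex solution z(t) = e^(lambda t) w."""
    e = cmath.exp(lam * t)
    return (e * w[0], e * w[1])

def real_solutions(t):
    """Closed-form real and imaginary parts of z(t)."""
    alpha, beta = lam.real, lam.imag
    v1 = [c.real for c in w]
    v2 = [c.imag for c in w]
    e = math.exp(alpha * t)
    cos_bt, sin_bt = math.cos(beta * t), math.sin(beta * t)
    x = tuple(e * (a * cos_bt - b * sin_bt) for a, b in zip(v1, v2))
    y = tuple(e * (a * sin_bt + b * cos_bt) for a, b in zip(v1, v2))
    return x, y

def residual(t):
    """Largest entry of |x'(t) - A x(t)| for x = Re z, using x' = Re(lambda z)."""
    zt = z(t)
    x = tuple(c.real for c in zt)
    x_prime = tuple((lam * c).real for c in zt)
    return max(abs(p - q) for p, q in zip(x_prime, matvec(A, x)))

x, y = real_solutions(0.3)
print(x, y, residual(0.3))
```

Python’s built-in `complex` type does the bookkeeping with i here, which is why the real-valued formulas can be checked against it directly.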

So now we’re left with the final case for our eigenvalues: the multiplicity 2 one (which is literally called “the easy case” in the book). If this multiplicity nonsense is the case, then T² – 4D = 0, so D = T²/4 and our characteristic polynomial will be

$$\lambda^2 - T\lambda + \frac{T^2}{4} = \left(\lambda - \frac{T}{2}\right)^2$$

In this case, λ₁ is T/2 and it has multiplicity 2, because the factor (λ – λ₁) shows up twice. This was about the time when I finally realized that multiplicity means something and I don’t think we ever went over it so here’s a thing for you to understand it (like I now do):

From this there are two subcases, depending on the dimension of the eigenspace of λ₁. Since the eigenspace is a subspace of R², the dimension can only be 1 or 2.

If the dimension is 2, then the eigenspace must be equal to all of R². This means every vector is an eigenvector, so Av = λ₁v for all v in R², which forces A = λ₁I. For example, if we had

Here’s a theorem concerning the other subcase, where the eigenvalue λ has multiplicity 2 and its eigenspace has dimension 1.

“Suppose that A is a 2 × 2 matrix with one eigenvalue λ of multiplicity 2, and suppose that the eigenspace of λ has dimension 1. Let v₁ be a nonzero eigenvector, and choose v₂ such that (A – λI)v₂ = v₁. Then

$$x_1(t) = e^{\lambda t}v_1 \quad\text{and}\quad x_2(t) = e^{\lambda t}(v_2 + t v_1)$$

form a fundamental set of solutions to the system x′ = Ax” (388).

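This means the general solution can be written as

$$x(t) = C_1 e^{\lambda t}v_1 + C_2 e^{\lambda t}(v_2 + t v_1) = e^{\lambda t}\left[(C_1 + C_2 t)v_1 + C_2 v_2\right]$$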
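To see the repeated-eigenvalue recipe in action, here is a minimal Python sketch for one matrix whose only eigenvalue is λ = 2 with a one-dimensional eigenspace. The matrix `A` and the vectors `v1` and `v2` are assumptions chosen by hand for illustration. The sketch checks that (A – λI)v₂ = v₁ and that the combination of the two solutions from the theorem satisfies x′ = Ax.

```python
import math

# Hypothetical example: T = 4 and D = 4, so T^2 - 4D = 0 and lambda = T/2 = 2.
A = [[1.0, 1.0], [-1.0, 3.0]]
lam = 2.0
N = [[A[0][0] - lam, A[0][1]],
     [A[1][0], A[1][1] - lam]]   # N = A - lambda*I
v1 = (1.0, 1.0)   # eigenvector: N v1 = 0
v2 = (0.0, 1.0)   # chosen so that N v2 = v1

def matvec(M, x):
    """Multiply a 2x2 matrix by a 2-vector."""
    return (M[0][0] * x[0] + M[0][1] * x[1],
            M[1][0] * x[0] + M[1][1] * x[1])

def x(t, C1, C2):
    """General solution e^(lambda t) [(C1 + C2 t) v1 + C2 v2]."""
    e = math.exp(lam * t)
    return tuple(e * ((C1 + C2 * t) * a + C2 * b) for a, b in zip(v1, v2))

def x_prime(t, C1, C2):
    """Derivative by the product rule: lambda * x(t) + e^(lambda t) C2 v1."""
    e = math.exp(lam * t)
    return tuple(lam * xi + e * C2 * a for xi, a in zip(x(t, C1, C2), v1))

def residual(t, C1, C2):
    """Largest entry of |x'(t) - A x(t)|; should be at rounding-error level."""
    lhs = x_prime(t, C1, C2)
    rhs = matvec(A, x(t, C1, C2))
    return max(abs(p - q) for p, q in zip(lhs, rhs))

print(matvec(N, v1), matvec(N, v2), residual(0.5, 1.5, -2.0))
```

Note that v₂ is not unique: adding any multiple of v₁ to it still satisfies (A – λI)v₂ = v₁, so other choices work just as well.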

Finally, here's one more website concerning this material that I didn't quite know where to put in this summary:

All right, that’s it for section 9.2! It wasn’t as bad as I thought it was going to be.

I’ve been ill for the past couple of days, so I’m really foggy right now. I feel pretty good about all this, but if something is blatantly wrong and I don’t pick up on it for a while, then I’m really sorry about that.

I’ll see you when I see you.